"rex" is the short form of "regular expression". # Edit 2 - little editings - 24th May 2020

SPLUNK REX FREE

The rex and erex commands let you manipulate, calculate on, or display specific data held inside fields you have already extracted.

What is the Rex Command?

The rex command can be used to create a new field out of any existing field which you have previously defined. This new field will appear in the field sidebar of the Search and Reporting app and can be used like any other extracted field. To define what your new field will be called in Splunk, name the capture group in the regular expression, using the following syntax: `| rex field=<field> "(?<new_field_name><regex>)"`

What is the Erex Command?

The erex command allows users to generate regular expressions. Unlike Splunk's rex and regex commands, erex does not require knowledge of regex; instead, it lets a user define examples and counterexamples of the data that needs to be matched.

Erex Command Syntax: `| erex <new_field_name> examples="<example1>, <example2>" counterexamples="<counterexample>"`

Erex Command Syntax Example: `| erex Port_Used examples="Port 8000, Port 3182"`

Start Using Field Extractions, Rex, and Erex Commands

A ton of incredible work can be done with your data in Splunk, including extracting and manipulating fields in your data. But you don't have to master Splunk by yourself in order to get the most value out of it. Small, day-to-day optimizations of your environment can make all the difference in how you understand and use the data in your Splunk environment to manage all the work on your plate.

Cue Expertise on Demand (EOD), a service that can help with those Splunk issues and improvements to scale. EOD is designed to answer your team's daily questions and break through stubborn roadblocks. Book a free consultation today; our team of experts is ready to help.
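As a concrete illustration of the rex syntax described above, here is a minimal sketch in SPL. The sample event text and the `Port_Used` field name are assumptions made up for illustration; `makeresults` is only used to generate a throwaway event so the search can be pasted into the search bar without touching real data.

```spl
| makeresults
``` Hypothetical sample event, created only for this illustration ```
| eval _raw="Connection accepted on Port 8000 from host web01"
``` The named capture group (?<Port_Used>...) sets the name of the new field ```
| rex field=_raw "Port (?<Port_Used>\d+)"
| table _raw Port_Used
```

If the pattern matches, `Port_Used` should contain the digits that follow "Port" (here, 8000). When an event does not match the pattern, rex leaves the field empty for that event rather than failing the search.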

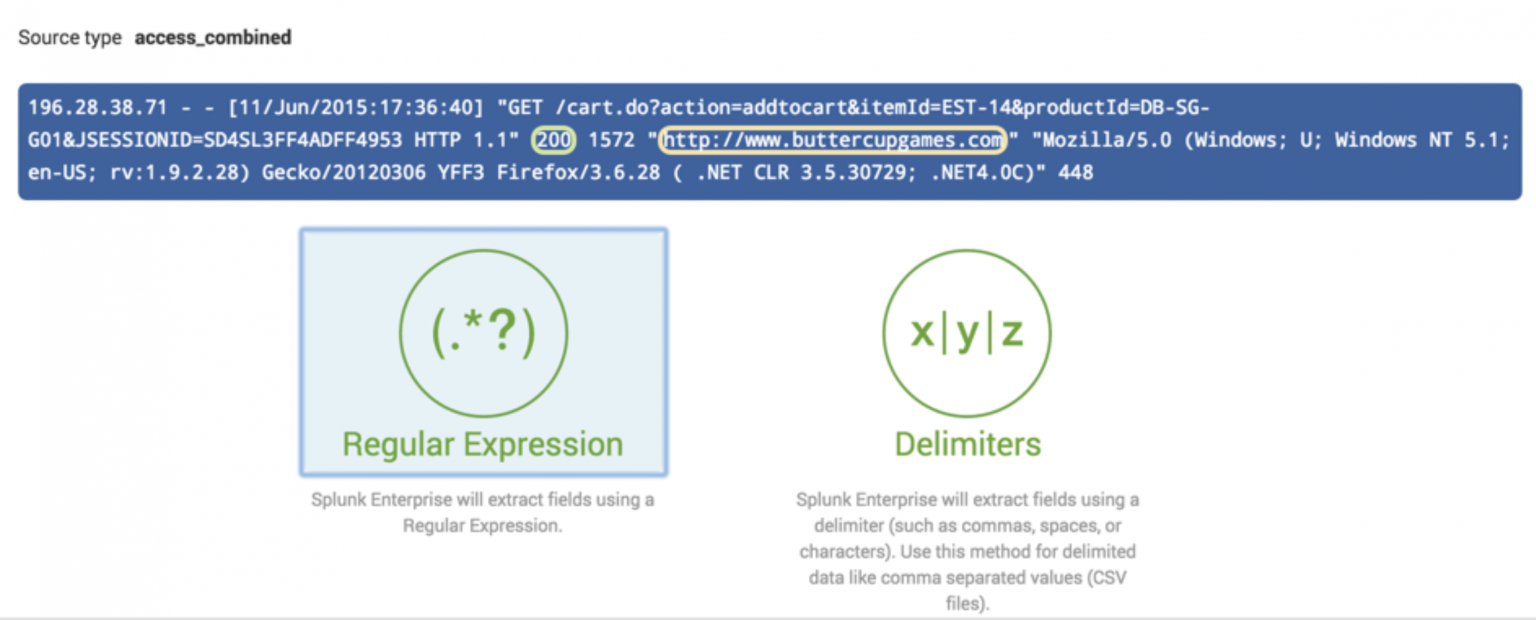

Pictured above is one of Splunk's solutions for extracting searchable fields out of your data via Splunk Web.

Step 1: Within the Search and Reporting app, users will see this button available upon search. After clicking it, a sample of the file is presented so that you can select an event from the data to work with. The image below demonstrates this feature of Splunk's Field Extractor in the GUI, after selecting an event from the sample data.

Figure 2 – Sample file in Splunk's Field Extractor in the GUI

Step 2: From here, you have two options: use a regular expression to separate patterns in your event data into fields, or separate fields by delimiter. Delimiters are characters used to separate values, such as commas, pipes, tabs, and colons.

Figure 3 – Regular expressions vs delimiter in Splunk

Figure 4 – Delimiter in Splunk's Field Extractor

Step 3: If you have selected a delimiter to separate your fields, Splunk will automatically create a tabular view so you can see what all properly parsed events would look like compared to the _raw data pictured above.

Step 4: You can choose to rename all fields parsed by the selected delimiter. After saving, you will be able to search on these fields, perform mathematical operations, and use advanced SPL commands.

What's Next? Rex and Erex Commands

What are Rex and Erex Commands?

After extracting fields, you may find that some fields contain specific data you would like to manipulate, use for calculations, or display by themselves.
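The delimiter-based extraction in the steps above is done through the Field Extractor GUI, but the same idea can be sketched at search time in SPL. This is an illustration rather than what the Field Extractor generates: the comma-separated sample event and the field names `user`, `port`, and `method` are hypothetical.

```spl
| makeresults
``` Hypothetical comma-delimited event, created only for this illustration ```
| eval _raw="alice,8000,GET"
``` Split the raw text on the delimiter into a multivalue field ```
| eval parts=split(_raw, ",")
``` Pick each value out by position and give it a field name ```
| eval user=mvindex(parts, 0), port=mvindex(parts, 1), method=mvindex(parts, 2)
| table _raw user port method
```

Unlike a saved field extraction, fields created this way exist only for the duration of the search, which makes the approach convenient for trying out a delimiter before saving an extraction in the GUI.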

SPLUNK REX HOW TO

After uploading a CSV, monitoring a log file, or forwarding data for indexing, more often than not the data does not look the way you'd expect it to. The large blocks of unseparated data produced at ingestion are hard to read and cannot be searched effectively. If the data is not already separated into events, doing so may seem like an uphill battle. You may be wondering how to parse the data and run advanced search commands using fields. This is where field extraction comes in handy.

What is a field extraction?

A field extraction enables you to extract additional fields out of your data sources. This lets you gain more insight from your data, so you and other stakeholders can use it to make informed decisions about the business.

Field Extraction via the GUI

Field extractions in Splunk are the function and result of extracting fields from your event data, for both default and custom fields. Field extractions allow you to organize your data in a way that lets you see the results you're looking for.

How to Perform a Field Extraction

Figure 1 – Extracting searchable fields via Splunk Web
